The U.S. Army is dabbling in incorporating AI chatbots into its strategic plans, albeit within the scope of a war game simulation based on the popular video game StarCraft II.

This study, led by the U.S. Army Research Laboratory, analyzes the battlefield strategies of OpenAI’s GPT-4 Turbo and GPT-4 Vision.

This is part of OpenAI’s collaboration with the Department of Defense (DOD), which has been under way since the creation of the generative AI task force last year.

The use of AI on the battlefield is hotly debated, with a recent similar study on AI wargaming finding that LLMs like GPT-3.5 and GPT-4 tend to escalate diplomatic tactics, sometimes leading to nuclear war.

This new study from the U.S. Army used StarCraft II to simulate a battlefield scenario involving a limited number of military units.

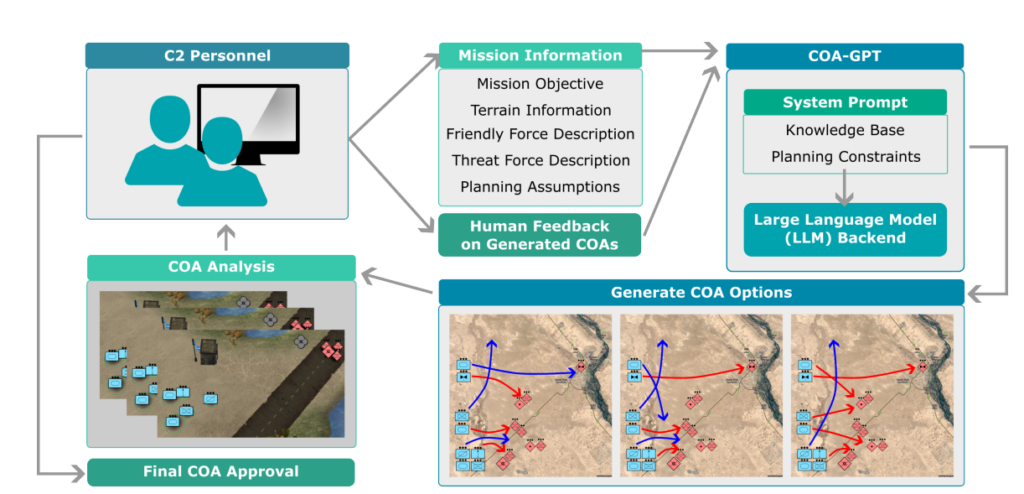

The researchers called this system “COA-GPT.” COA stands for “Courses of Action,” a military term that essentially describes military tactics.

COA-GPT served as an assistant to the military commander, tasked with devising strategies to eliminate enemy forces and capture strategic points.

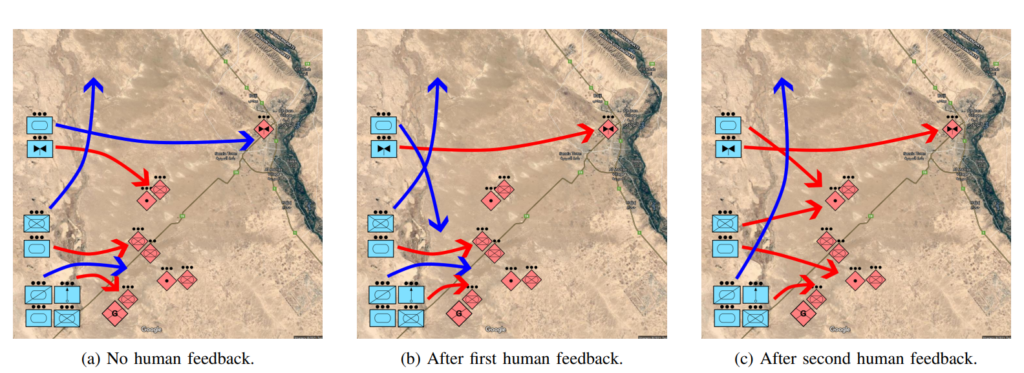

The researchers note that traditional COA development is notoriously slow and labor-intensive. COA-GPT makes decisions in seconds while integrating human feedback into the AI’s learning process.

COA-GPT is superior to other methods, but it comes at a cost.

COA-GPT outperformed existing methods in generating strategic COAs and could adapt to real-time feedback.

But it had a flaw. In particular, COA-GPT incurred more casualties while achieving its mission objectives.

“We observed that COA-GPT and COA-GPT-V resulted in higher friendly casualties compared to other baselines, even when augmented with human feedback,” the study states.

Does this deter the researchers? Apparently not.

According to the study, “In conclusion, COA-GPT represents a transformative approach to military C2 operations, fostering faster, more agile decision-making and maintaining strategic advantage in modern warfare.”

It is worrying that an AI system that caused more unnecessary casualties than the baselines is described as a “transformative approach.”

The Department of Defense has already identified other avenues for exploring military applications of AI, but concerns remain about the readiness and ethical implications of the technology.

For example, who is responsible when a military AI application goes wrong? The developers? The person in charge? Or someone further down the chain?

AI warfare systems have already been deployed in Ukraine and the Palestinian-Israeli conflict, but these questions remain untested.

Let’s hope it stays that way.