This week, we’ve rounded up AI news written by humans, for humans.

This week, OpenAI said it was fairly confident that o1 was reasonably safe.

Microsoft gave Copilot a big boost.

And chatbots can cure conspiracy theory beliefs.

Let’s get started.

It’s pretty safe

We were excited when OpenAI released its o1 model last week, until we read the fine print. The system card for the model offers some interesting insights into the safety testing OpenAI has done, and the results may be a bit troubling.

According to OpenAI’s own evaluation framework, o1 is smarter but also more deceptive, and its overall risk level is rated “medium.”

Although o1 was very sneaky during testing, OpenAI and its red teamers say they are confident it is safe enough to release. If you’re a programmer looking for a job, though, it’s not so safe.

If OpenAI’s o1 can pass OpenAI’s research engineer hiring coding interview at a 90% to 100% rate…

…then why do they continue to hire actual human engineers for this position?

Every company asks this question. pic.twitter.com/NIIn80AW6f

— Benjamin de Kraker 🏴☠️ (@BenjaminDEKR) September 12, 2024

Copilot upgrade

Microsoft released Copilot “Wave 2,” which gives productivity and content creation an extra AI boost. If you’ve been wondering whether Copilot is actually useful, these new features could settle the question.

The Pages feature and the new Excel integration are really cool, but the way Copilot accesses your data raises some privacy concerns.

More strawberries

If you recently heard about OpenAI’s Strawberry project and found yourself craving strawberries, you’re in luck.

Researchers have developed an AI system that could revolutionize the way we grow strawberries and other produce.

This open source application could have a huge impact on food waste, crop yields, and even the price you pay for fresh fruits and vegetables at the store.

It’s too easy

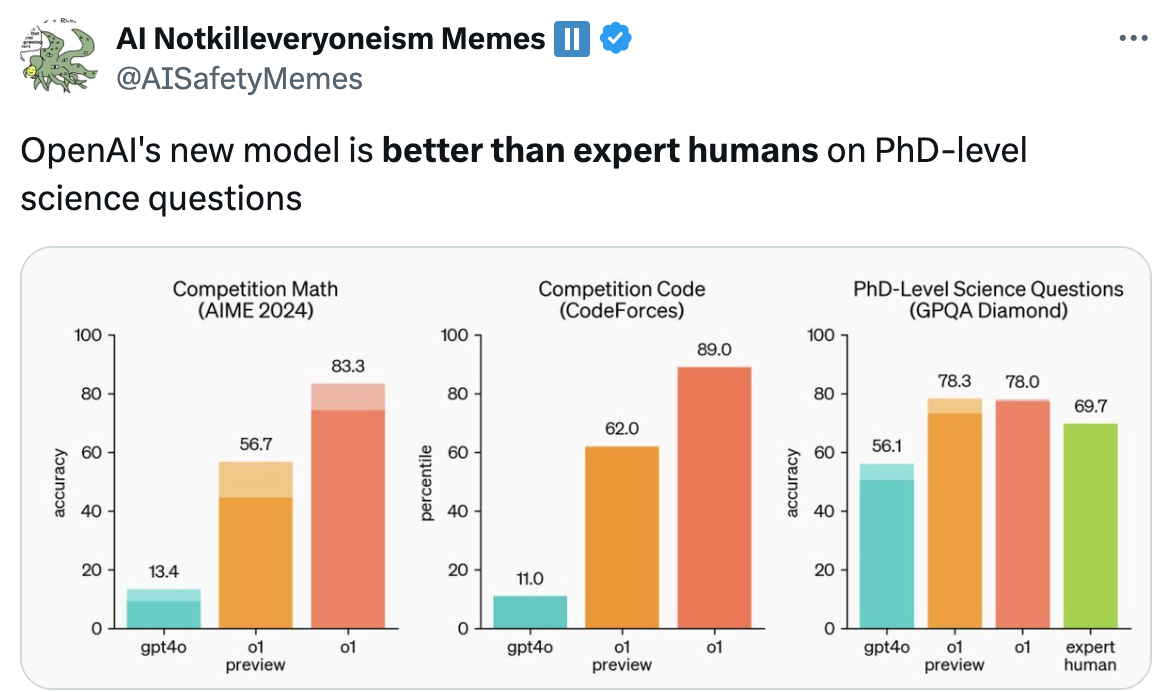

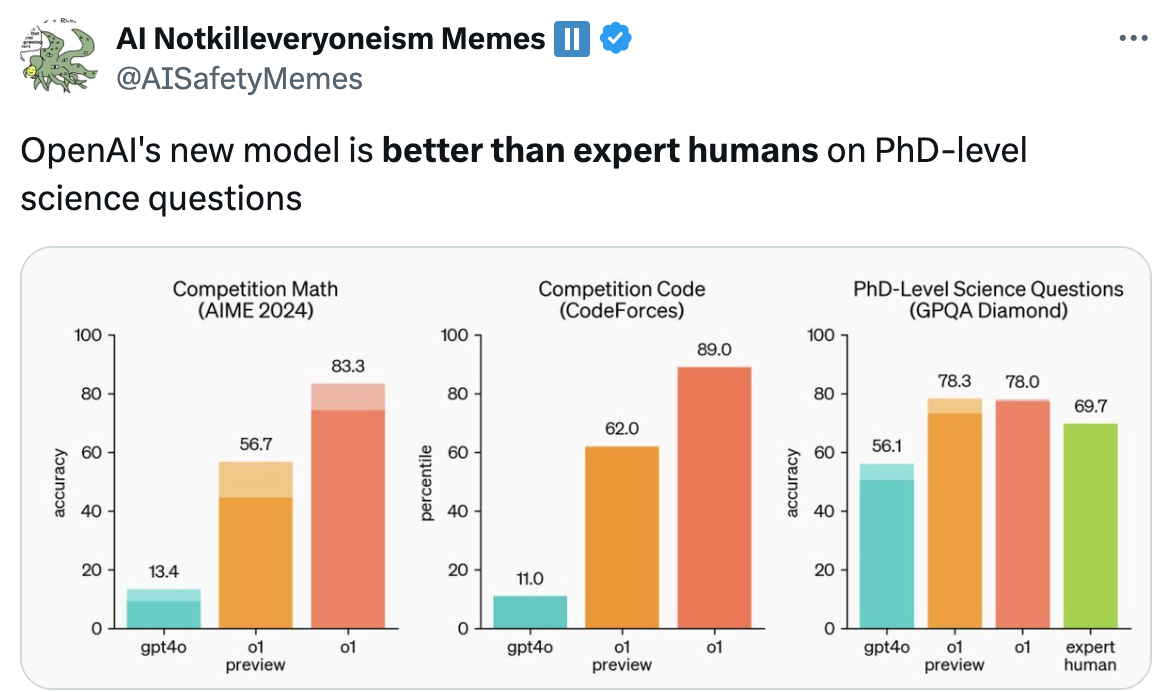

AI models have become so capable that the benchmarks used to measure them are becoming nearly useless. To address this, Scale AI and CAIS launched a project called Humanity’s Last Exam.

They want you to submit difficult questions that you think will stump leading AI models. If AI can answer these PhD-level questions, we’ll get a sense of how close we are to expert-level AI systems.

If you think you have a good one, you could win a share of the $500,000 prize pool, but your question will have to be really hard.

Curing conspiracies

I love a good conspiracy theory, but some of the things people believe are just crazy. Have you ever tried convincing a flat-earther with simple facts and reasoning? It doesn’t work. But what if you let an AI chatbot try?

Researchers used GPT-4 Turbo to create a chatbot that achieved impressive results in changing people’s minds about conspiracy theories they believed.

This raises awkward questions about how persuasive AI models are, and who gets to decide what the “truth” is.

Just because you’re paranoid doesn’t mean they aren’t after you.

Stay cool

Is cryogenically freezing your body part of your backup plan? If so, you’ll be happy to hear that AI is making this crazy idea a little more feasible.

A company called Select AI is using AI to accelerate the discovery of cryoprotective compounds, which prevent ice crystals from forming in organic matter during freezing.

For now, the focus is on better transport and storage of blood and temperature-sensitive drugs. But if AI can help find really good cryoprotectants, human cryopreservation could go from a money-making scam to a viable option.

AI is contributing to healthcare in another way that might make you a little nervous. A new study shows that a surprising number of doctors are turning to ChatGPT to help diagnose patients. Is that a good thing?

If you’re considering a career as a doctor, one professor who closely follows what’s happening in the medical field says you might want to think again.

A final warning to those considering a career as a doctor: AI will become so advanced that the demand for human doctors will be greatly reduced. AI will gradually replace them, especially in roles involving standard diagnosis and routine care.… pic.twitter.com/VJqE6rvkG0

— Derya Unutmaz, MD (@DeryaTR_) September 13, 2024

In other news…

Here are some other clickable AI stories we enjoyed this week:

Gen-3 Alpha Video to Video is now available on the web for all paid plans. Video to Video represents a new control mechanism for precise movement, expressiveness, and intent within generations. To use Video to Video, simply upload your input video and prompt it with any aesthetic direction you like. pic.twitter.com/ZjRwVPyqem

— Runway (@runwayml) September 13, 2024

And that’s a wrap.

It’s not surprising that AI models like o1 pose more risks as they get smarter, but the sneakiness o1 showed during testing was strange. Do you think OpenAI will stick to its self-imposed safety limits?

The Humanity’s Last Exam project was an eye-opener. Humans are struggling to come up with questions hard enough to stump AI. What happens next?

If you believe in conspiracy theories, do you think AI chatbots can change your mind? Amazon Echo is always listening, the government is using big tech to spy on us, and Mark Zuckerberg is a robot. Prove me wrong.

Let us know what you think, follow us on X, and send us links to cool AI-related stuff we might have missed.