The phrase “practice makes perfect” is usually reserved for humans, but it’s also a good adage for robots newly deployed in unfamiliar environments.

Imagine a robot arriving at a warehouse. The robot comes packaged with skills it was trained on, such as placing an object, and now it has to pick up items from a shelf it has never seen. At first, the task is difficult because the machine has to get used to its new surroundings. To improve, the robot needs to work out which skills within the overall task need improvement, and then specialize (or parameterize) those actions.

A person on site could try to program the robot to optimize its performance, but researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the AI Institute have developed a more effective alternative. Their “Estimate, Extrapolate, and Situate” (EES) algorithm, presented last month at the Robotics: Science and Systems conference, could let these machines practice on their own, potentially helping them get better at useful tasks in factories, homes, and hospitals.

Assess the situation

To help robots get better at activities like sweeping floors, EES uses a vision system that locates and tracks the machine’s surroundings. The algorithm then estimates how reliably the robot executes an action (such as sweeping) and whether more practice would be worthwhile. EES forecasts how well the robot could perform the overall task if it refined that particular skill, and finally, the robot practices. After each attempt, the vision system checks whether the skill was performed correctly.
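To make the practice loop described above a little more concrete, here is a toy Python sketch. It is not the researchers’ implementation. The Beta(1, 1) success-rate estimate, the “one more success” extrapolation, the assumption that a task succeeds only when every skill in its plan succeeds independently, the `min_gain` stopping threshold, and the simulated learning curve are all illustrative assumptions, and every name in it (`SkillStats`, `choose_skill`, `practice`, and the skill labels) is hypothetical. A random draw stands in for the vision system’s check of each attempt.

```python
import random
from dataclasses import dataclass


@dataclass
class SkillStats:
    """Hypothetical record of one skill's practice outcomes."""
    successes: int = 0
    failures: int = 0

    def estimate(self) -> float:
        # Estimate: posterior-mean success rate under a uniform Beta(1, 1) prior.
        return (self.successes + 1) / (self.successes + self.failures + 2)

    def extrapolate(self) -> float:
        # Extrapolate (crude stand-in): the estimate after one more success.
        return (self.successes + 2) / (self.successes + self.failures + 3)


def task_success(plan, stats, improved=None):
    """Predicted task success, assuming every skill in the plan must succeed
    and skills fail independently. `improved` uses its extrapolated rate."""
    p = 1.0
    for skill in plan:
        s = stats[skill]
        p *= s.extrapolate() if skill == improved else s.estimate()
    return p


def choose_skill(plan, stats, min_gain=0.01):
    """Return the skill whose improvement most raises predicted task success,
    or None if no skill seems worth practicing (gain below min_gain)."""
    baseline = task_success(plan, stats)
    # dict.fromkeys de-duplicates skills while keeping plan order for ties.
    gains = {s: task_success(plan, stats, improved=s) - baseline
             for s in dict.fromkeys(plan)}
    best = max(gains, key=gains.get)
    return best if gains[best] >= min_gain else None


def practice(plan, stats, true_rates, attempts, rng, learn_rate=0.1):
    """Simulated practice loop. On a real robot, a perception check would
    label each attempt; here a random draw against a hidden rate does."""
    for _ in range(attempts):
        skill = choose_skill(plan, stats)
        if skill is None:
            break  # no skill is predicted to be worth more practice
        if rng.random() < true_rates[skill]:
            stats[skill].successes += 1
        else:
            stats[skill].failures += 1
        # Toy learning model: each attempt nudges the hidden rate toward 1.
        true_rates[skill] += learn_rate * (1.0 - true_rates[skill])
    return stats


if __name__ == "__main__":
    rng = random.Random(0)
    plan = ["grasp_brush", "sweep"]
    stats = {s: SkillStats() for s in plan}
    true_rates = {"grasp_brush": 0.4, "sweep": 0.8}
    practice(plan, stats, true_rates, attempts=30, rng=rng)
    for name, s in stats.items():
        print(name, s, round(s.estimate(), 2))
```

Because predicted task success in this sketch is a product of per-skill rates, it tends to practice the weakest, least-tested skill first, since improving that skill lifts the product the most, and it stops once no skill’s predicted gain clears the threshold.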

EES could be useful in places like hospitals, factories, homes, or coffee shops. For example, a robot asked to tidy up a living room would need help practicing skills like sweeping. According to Nishanth Kumar SM ’24 and his colleagues, though, EES could help that robot improve without human intervention, using only a few practice trials.

“When we started this project, we wondered whether this specialization could be achieved on a real robot with a reasonable amount of samples,” said Kumar, a CSAIL affiliate and a PhD student in electrical engineering and computer science who co-authored a paper describing the work. “Now, we have an algorithm that can allow a robot to meaningfully improve a particular skill with tens or hundreds of data points in a reasonable amount of time—an upgrade from the thousands or millions of samples required by standard reinforcement learning algorithms.”

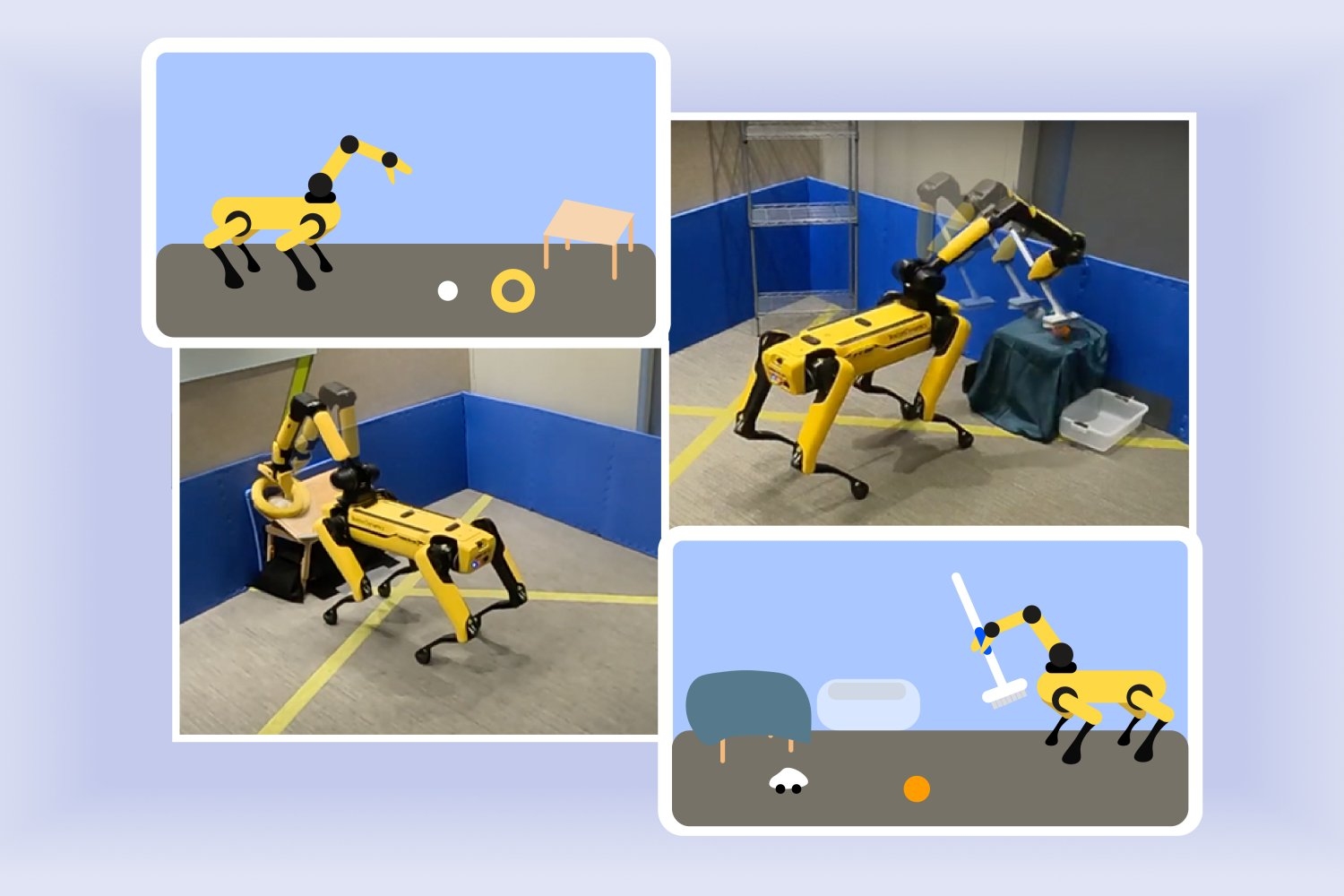

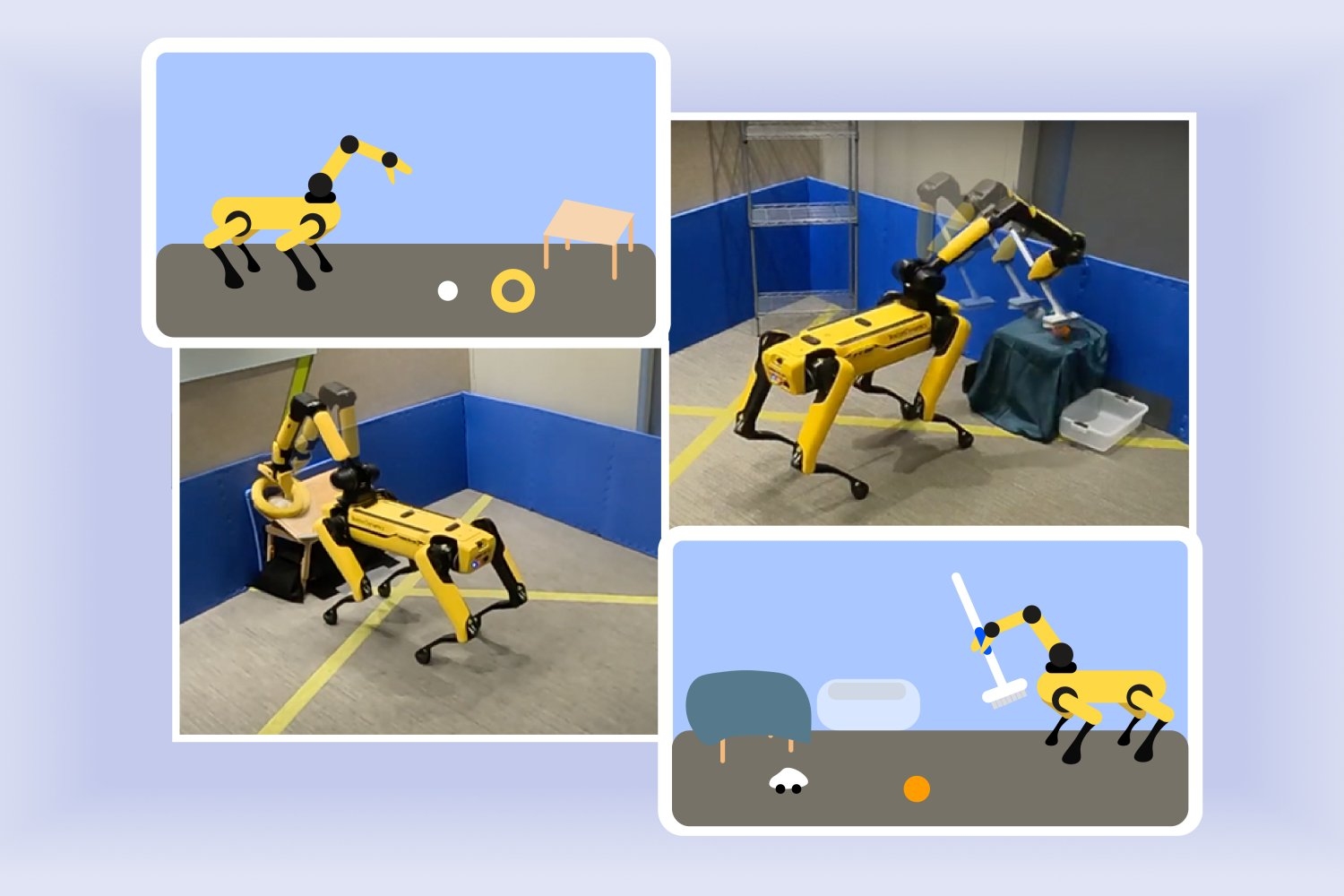

See Spot Sweep

EES’s sample efficiency was evident when the researchers implemented it on Boston Dynamics’ Spot quadruped during research trials at the AI Institute. The robot, which has an arm attached to its back, completed manipulation tasks after a few hours of practice. In one demonstration, the robot learned how to securely place a ball and ring on a slanted table in about three hours. In another, the algorithm guided the machine to improve at sweeping toys into a bin in about two hours. Both results appear to improve on previous frameworks, which would likely have taken more than 10 hours per task.

“We aimed to have the robot gather its own experience so that it can better choose the right strategy for deployment,” said co-lead author Tom Silver SM ’20, PhD ’24, an electrical engineering and computer science (EECS) alumnus and CSAIL affiliate who is now an assistant professor at Princeton University. “We wanted to answer a key question by focusing on what the robot knows: What are the most useful skills in its skill library for the robot to practice right now?”

EES could eventually help streamline autonomous robot practice in new deployment environments, but for now, it has some limitations. For one, the researchers used a table that was low to the ground, which made it easier for the robot to see the objects. Kumar and Silver also 3D-printed an attachable handle that made the brush easier for Spot to grab. In addition, Spot failed to detect some items and identified objects in the wrong locations; the researchers counted these errors as failures.

Give the robot homework

The researchers note that a simulator could further speed up practice. Instead of physically practicing each skill on its own, the robot could eventually combine real and virtual practice. They also hope to make the system faster by reducing the imaging latency they experienced during their experiments. In the future, they may investigate algorithms that reason over sequences of practice attempts rather than planning which skills to refine.

“Enabling robots to learn on their own is both incredibly useful and incredibly challenging,” says Danfei Xu, an assistant professor in Georgia Tech’s School of Interactive Computing and a research scientist at NVIDIA AI, who was not involved in this work. “In the future, we expect home robots to be sold to all sorts of households and to perform a wide range of tasks. We can’t program everything they need to know in advance, so we need to make sure that they can learn on the job. But leaving robots to explore and learn without guidance can be very slow and can have unintended consequences. The work by Silver and his colleagues introduces algorithms that allow robots to autonomously practice skills in a systematic way. This is a huge step forward in creating home robots that can continuously evolve and improve on their own.”

Silver and Kumar’s co-authors are AI Institute researchers Stephen Proulx and Jennifer Barry, and four CSAIL members: Northeastern University PhD student and visiting scholar Linfeng Zhao, MIT EECS PhD student Willie McClinton, and MIT EECS professors Leslie Pack Kaelbling and Tomás Lozano-Pérez. Their research was supported by the AI Institute, the US National Science Foundation, the US Air Force Office of Scientific Research, the US Office of Naval Research, the US Army Research Office, and MIT Quest for Intelligence, and used high-performance computing resources at MIT SuperCloud and the Lincoln Laboratory Supercomputing Center.